PAOC Spotlights

New Climate Model to be Built from the Ground Up

The following news article is adapted from a press release issued by Caltech, in partnership with the MIT School of Science, the Naval Postgraduate School, and the Jet Propulsion Laboratory.

Facing the certainty of a changing climate coupled with the uncertainty that remains in predictions of how it will change, scientists and engineers from across the country are teaming up to build a new type of climate model that will provide more precise and actionable predictions.

Leveraging recent advances in the computational and data sciences, the comprehensive effort capitalizes on vast amounts of data that are now available and on increasingly powerful computing capabilities both for processing data and for simulating the Earth system.

The new model will be built by a consortium of researchers led by Caltech, in partnership with MIT, the Naval Postgraduate School (NPS), and the Jet Propulsion Laboratory (JPL), which Caltech manages for NASA. The consortium, dubbed the Climate Modeling Alliance (CliMA), plans to fuse Earth observations and high-resolution simulations into a model that represents important small-scale features, such as clouds and turbulence, more reliably than existing climate models. The goal is a climate model that projects future changes in critical variables such as cloud cover, rainfall, and sea ice extent with uncertainties no more than half those of existing models.


The MIT team's role in CliMA

At MIT, Professors Raffaele Ferrari and John Marshall, both Cecil and Ida Green Professors of Oceanography and members of the Program in Atmospheres, Oceans and Climate (PAOC), will develop the ocean and sea-ice component of the CliMA climate model. They will draw on their expertise in developing ocean models (Marshall spearheaded the MITgcm, with Chris Hill and Jean-Michel Campin as key developers) and in representing sub-gridscale mixing processes in the ocean. To take advantage of new computer architectures, languages, and machine-learning techniques, the team has partnered with Alan Edelman's group in MIT's Computer Science and Artificial Intelligence Laboratory (CSAIL), which will help write the new-generation climate model in Julia, the programming language the group developed. This will enable the MIT team to target GPUs, CPUs, and evolving computer architectures from a single code base. The CliMA group's strategy is, to the extent possible, to develop common hydrodynamical cores, parameterizations, and machine-learning techniques for both atmosphere and ocean, so that development in one fluid can inform the other.

"Projections with current climate models—for example, of how features such as rainfall extremes will change—still have large uncertainties, and the uncertainties are poorly quantified," says Tapio Schneider, Caltech's Theodore Y. Wu Professor of Environmental Science and Engineering, senior research scientist at JPL, and principal investigator of CliMA. "For cities planning their stormwater management infrastructure to withstand the next 100 years' worth of floods, this is a serious issue; concrete answers about the likely range of climate outcomes are key for planning."

The consortium will operate in a fast-paced, start-up-like atmosphere and hopes to have the new model up and running within the next five years, an aggressive timeline for building a climate model essentially from scratch.

"A fresh start gives us an opportunity to design the model from the outset to run effectively on modern and rapidly evolving computing hardware, and for the atmospheric and ocean models to be close cousins of each other, sharing the same numerical algorithms," says Frank Giraldo, professor of applied mathematics at NPS.

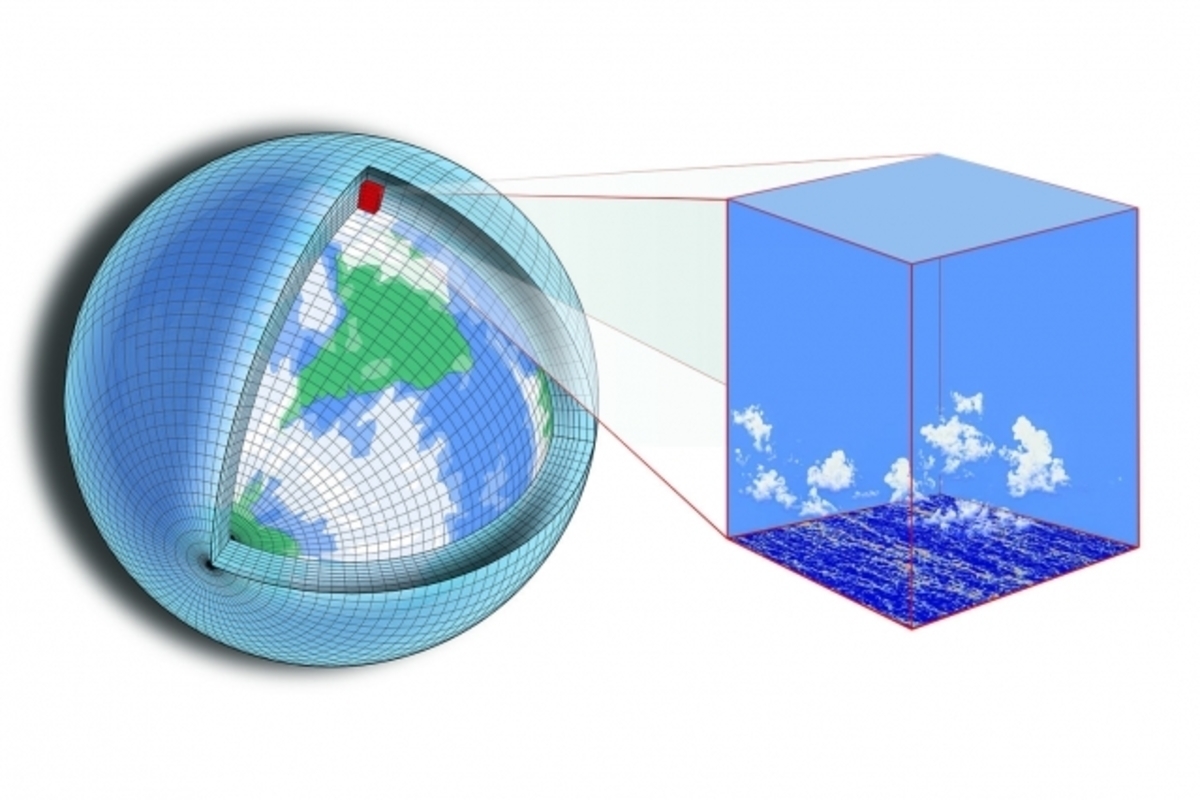

Current climate models divide the globe into a grid, compute what is happening within each sector of the grid, and work out how neighboring sectors interact with each other. The accuracy of any given model depends in part on the resolution at which it can view the Earth, that is, the size of the grid's sectors. Limitations in available computer processing power mean that those sectors generally cannot be any smaller than tens of kilometers per side. But for climate modeling, the devil is in the details, and those details get missed in a grid that is too coarse.
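To put the "tens of kilometers" limit in perspective, the back-of-the-envelope sketch below (an illustration, not part of CliMA) estimates how many horizontal grid columns cover the globe at a given spacing and how the rough computational cost grows as the grid is refined. It assumes square cells on a sphere of mean Earth radius, ignores vertical levels, and assumes the time step must shrink in proportion to the grid spacing, as it does for explicit schemes limited by a CFL-type stability condition. The function names are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius
EARTH_SURFACE_KM2 = 4 * math.pi * EARTH_RADIUS_KM**2  # about 5.1e8 km^2


def horizontal_cells(spacing_km: float) -> int:
    """Approximate number of square grid columns covering the globe (hypothetical helper)."""
    return round(EARTH_SURFACE_KM2 / spacing_km**2)


def relative_cost(spacing_km: float, reference_km: float) -> float:
    """Cost relative to a reference grid (hypothetical helper).

    Assumes cost ~ (number of columns) x (number of time steps), with the
    time step proportional to grid spacing, so cost scales as (1/spacing)^3.
    Vertical levels are ignored.
    """
    ratio = reference_km / spacing_km
    return ratio**3


if __name__ == "__main__":
    for dx in (100.0, 50.0, 25.0, 1.0):
        print(f"{dx:6.0f} km: {horizontal_cells(dx):>12,d} columns, "
              f"{relative_cost(dx, 100.0):>12,.0f}x cost vs 100 km")
```

Under these assumptions, halving the grid spacing from 100 km to 50 km quadruples the number of columns and raises the cost roughly eightfold, while a 1 km grid would cost about a million times more than a 100 km one. Real models differ in detail, but this cubic scaling is why resolution is bounded by available computing power.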

For example, low-lying clouds have a significant impact on climate by reflecting sunlight, but the turbulent plumes that sustain them are so small that they fall through the cracks of existing models. Similarly, changes in Arctic sea ice have been linked to wide-ranging effects on everything from polar climate to drought in California, but it is difficult to predict how that ice will change in the future because it is sensitive to the density of cloud cover above and the temperature of ocean currents below, neither of which can be resolved by current models.

To capture the large-scale impact of these small-scale features, the team will develop high-resolution simulations that model the features in detail in selected regions of the globe. Those simulations will be nested within the larger climate model. The effect will be a model capable of "zooming in" on selected regions, providing detailed local climate information about those areas and informing the modeling of small-scale processes everywhere else.

"The ocean soaks up much of the heat and carbon accumulating in the climate system. However, just how much it takes up depends on turbulent eddies in the upper ocean, which are too small to be resolved in climate models," says Raffaele Ferrari, Cecil and Ida Green Professor of Oceanography at MIT. "Fusing nested high-resolution simulations with newly available measurements from, for example, a fleet of thousands of autonomous floats could enable a leap in the accuracy of ocean predictions."

While existing models are often tested by checking predictions against observations, the new model will take ground-truthing a step further by using data-assimilation and machine-learning tools to "teach" it to improve itself in real time, harnessing both Earth observations and the nested high-resolution simulations.

"The success of computational weather forecasting demonstrates the power of using data to improve the accuracy of computer models; we aim to bring the same successes to climate prediction," says Andrew Stuart, Caltech's Bren Professor of Computing and Mathematical Sciences.

Each of the partner institutions brings a different strength and research expertise to the project. At Caltech, Schneider and Stuart will focus on creating the data-assimilation and machine-learning algorithms, as well as models for clouds, turbulence, and other atmospheric features. At MIT, Ferrari and John Marshall, also a Cecil and Ida Green Professor of Oceanography, will lead a team that will model the ocean, including its large-scale circulation and turbulent mixing. At NPS, Giraldo will lead the development of the computational core of the new atmosphere model in collaboration with Jeremy Kozdon and Lucas Wilcox. At JPL, a group of scientists will collaborate with the team at Caltech's campus to develop process models for the atmosphere, biosphere, and cryosphere.

Funding for this project is provided by the generosity of Eric and Wendy Schmidt (by recommendation of the Schmidt Futures program), Mission Control for Earth, Paul G. Allen Philanthropies, Caltech trustee Charles Trimble, and the National Science Foundation.