PAOC Spotlights

Historical Climate Models Accurately Projected Global Warming

New research from MIT EAPS scientists explores the accuracy of climate models’ physics and of the relationship they project between greenhouse gas emissions and temperature rise.

While scientists have known about the warming effect of greenhouse gases since the late 1800s, it was not until the advent of computerized climate models in the 1960s that the science became truly quantitative. The first of these models were one-dimensional, solving only for the variation of Earth’s atmosphere with altitude, averaged over the whole globe. These first models are so simple by today’s standards that we now refer to them as “toy models,” yet they accurately reproduced the general structure of Earth’s atmosphere and provided revolutionary scientific insights on climate and weather. Since then, models across the board have improved dramatically, along with their ability to predict global warming trends and the relationship of those trends to carbon emissions.

New research recently published in the journal Geophysical Research Letters (GRL) evaluated how well climate models published since the early 1970s could project the future average temperature of Earth’s surface, as well as the relationship between anthropogenic emissions and warming. My colleagues (MIT EAPS graduate student Tristan Abbott, UC Berkeley graduate student Zeke Hausfather, and Gavin Schmidt of NASA Goddard) and I found that most of the models have done incredibly well over the years.

History of climate modeling

One of the first experiments that scientists carried out with computer simulations of climate was to instantly double carbon dioxide concentrations and see what happened once the simulated climate had readjusted. This experiment was motivated by the rapidly increasing real-world emissions of the greenhouse gas carbon dioxide. The researchers generally found that the planet warmed by about 2.5 °C (or 4.5 °F) for a doubling of carbon dioxide concentrations, a value which is still consistent with today’s best estimates.

As the technology progressed and demand for more policy-relevant climate information grew, climate modelers shifted away from these hypothetical “instantaneous doubling” scenarios and developed plausible scenarios for the amount of future emissions in a given year. They then used their models to estimate the corresponding future global warming.

Around the same time, increased computer power allowed climate modelers to incorporate dynamical features of the Earth system using equations of geophysical fluid motion based on fundamental physical principles. In doing so, they could expand their simulations from one dimension (altitude) to three dimensions (altitude, longitude, and latitude) and explicitly represent Earth’s continents, mountains, ice caps, and oceans. As a result of differential solar heating between the tropics and the poles, and of Earth’s rotation, realistic features like atmospheric winds and ocean currents emerged from the basic fluid mechanical equations encoded in the simulations. In addition to the global warming signal of greenhouse gas emissions, new signals emerged from natural variations in the atmosphere-ocean system. Features like the El Niño Southern Oscillation, a periodic warming of the sea surface in the tropical eastern Pacific Ocean, are part of Earth’s background climate system.

To climate modelers interested in the human-caused signal of global warming, these natural temperature variations were essentially noise that obscured the human-caused signal. When the first of these simulations were published in the late 1980s, scientists realized that they would have to wait several decades for the future human-caused warming signal in globally-averaged temperature measurements to emerge above the noise of natural variability.

Evaluating a climate model’s performance

A couple of years ago, as a first-year graduate student recently introduced to classic climate modeling papers, it occurred to me that the accuracy of these models could now be assessed. Enough time had likely passed since these historical projections were published that their warming signals would have emerged, and we could now evaluate them against several decades of global temperature measurements. Several researchers had evaluated model projections against observations in blog posts and other informal contexts, but there were no comprehensive, peer-reviewed studies on the topic. Zeke Hausfather, who had produced the closest thing to a comprehensive evaluation of historical models at the time, initiated the project and assembled a team of investigators to survey all known quantitative historical climate model projections of global warming and check how they stacked up against reality. The team consisted of Zeke Hausfather, myself, my colleague Tristan Abbott at MIT, who studies atmospheric convection and clouds, and Gavin Schmidt, who leads NASA’s Goddard Institute for Space Studies and oversees the development of its climate model.

Our survey began with a literature review of every peer-reviewed reference we could find. For each reference, we either tracked down the original model data, reproduced the data from text, formulas, and tables, or, as a last resort, digitized the data from the figures. Then, we compared the projected warming rates from the models against several estimates of historical warming from a dense global network of temperature measurements with excellent coverage over the past fifty years. We also recorded the rate at which greenhouse gas concentrations (and other human-emitted climate agents) changed in both the model projections and reality.

The first result we found is that all of the 17 models correctly projected global warming (as opposed to either no warming or even cooling). While this is so unsurprising to climate scientists that it is not even mentioned in the paper, it may be surprising to non-experts. The second result is that most of the model projections (10 out of 17) published between 1970 and 2000 produced global average surface warming projections that were quantitatively consistent with the observed warming rate.

While comparing global warming rates may seem like a straightforward “apples to apples” comparison, it sweeps one very important difference between the simulated climate projections and reality under the rug: human behavior. Climate modelers have to guess at plausible future scenarios of anthropogenic carbon dioxide emissions to feed into the model. Some modelers developed projections meant to be realistic predictions of what society would actually do (e.g. Nordhaus’ 1977 coupled climate-economic model), while others chose to estimate “worst-case” (high emissions) and “best-case” (low emissions) scenarios to bracket all plausible realities.

As we conducted our comparison, it became clear that simply comparing the warming rates between simulations and reality could be misleading if the simulated emissions scenario and historical emissions were dramatically different. To account for differences in emissions between the simulations and reality, we calculated the warming rates with respect to anthropogenic radiative forcing, the rate at which human emissions trap energy at Earth’s surface, instead of calculating them with respect to time. Using this novel metric of the warming rate, we found that the model projections were even more consistent with reality (14 out of 17 were consistent by this measure).
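To make the distinction concrete, here is a minimal sketch of the two ways of measuring a warming rate: temperature change per decade, and temperature change per unit of radiative forcing. It is not the code used in the study. The function names, the made-up linear series, the hypothetical sensitivity of 0.5 °C per W/m², and the use of 3.7 W/m² as the forcing from doubled carbon dioxide are all assumptions chosen for illustration.

```python
def ols_slope(x, y):
    """Ordinary least-squares slope of y regressed on x."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    var = sum((xi - mean_x) ** 2 for xi in x)
    return cov / var


# Assumed forcing for a doubling of CO2 (W/m^2); a commonly quoted value,
# used here only to rescale the slope into a "warming per doubling" number.
F_2X = 3.7


def warming_metrics(years, temps, forcings):
    """Return (warming per decade, warming per unit forcing)."""
    per_decade = 10.0 * ols_slope(years, temps)   # degC per decade
    per_forcing = ols_slope(forcings, temps)      # degC per (W/m^2)
    return per_decade, per_forcing


# Entirely synthetic series: the hypothetical "model" assumed faster-growing
# emissions (and therefore forcing) than the hypothetical "observations",
# but both share the same underlying physics (same sensitivity).
years = list(range(1980, 2021))
sensitivity = 0.5                                     # degC per (W/m^2), made up
obs_forcing = [0.04 * (y - 1980) for y in years]      # W/m^2
model_forcing = [0.06 * (y - 1980) for y in years]    # W/m^2
obs_temp = [sensitivity * f for f in obs_forcing]
model_temp = [sensitivity * f for f in model_forcing]

obs_decade, obs_per_f = warming_metrics(years, obs_temp, obs_forcing)
mod_decade, mod_per_f = warming_metrics(years, model_temp, model_forcing)

print(f"per decade:   model {mod_decade:.2f}, observed {obs_decade:.2f}")
print(f"per W/m^2:    model {mod_per_f:.2f}, observed {obs_per_f:.2f}")
print(f"per doubling: model {F_2X * mod_per_f:.2f}, observed {F_2X * obs_per_f:.2f}")
```

In this toy case the model appears to warm too fast when judged against time, purely because its emissions scenario was too high. Judged against forcing, it matches the synthetic observations, which is the kind of mismatch the forcing-based metric is meant to remove.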

Unfortunately, understanding the full effects of climate change, as predicted by climate models, depends on more than just increasing global surface temperatures. Historically, climate scientists have done a great job of capturing the large-scale and mesoscale physics of the Earth. This has allowed researchers, like my colleagues and me, to make incredibly accurate predictions, which rely on understanding and mathematically characterizing a complex, interconnected Earth system. Additionally, climate science is based on more than just state-of-the-art computer models: measurements of the present climate, paleoproxy evidence from past climates, and fundamental theory provide additional constraints on future warming and bolster our confidence in the field’s findings. However, future climate change will not be experienced the same way around the world, and this is the next frontier for climate researchers. Climate impacts are nuanced and are experienced at smaller, regional scales, for which different models often still disagree. This is because different models may contain slightly different characterizations of the Earth’s physics, particularly small-scale features that do not last long but can make a big impact, like clouds. These slight differences in calculations, when spread over time and space, have the potential to cancel out or amplify other climate effects.

The results from this paper increase our confidence in climate models in that they have accurately projected the most basic climate metric through history: global warming. By many measures, today’s climate models are much more useful and skillful than the historical models reviewed here. This adds to our confidence in today’s state-of-the-art model projections, which climate scientists will continue to improve upon and use to pin down the specifics of climate change’s effects.

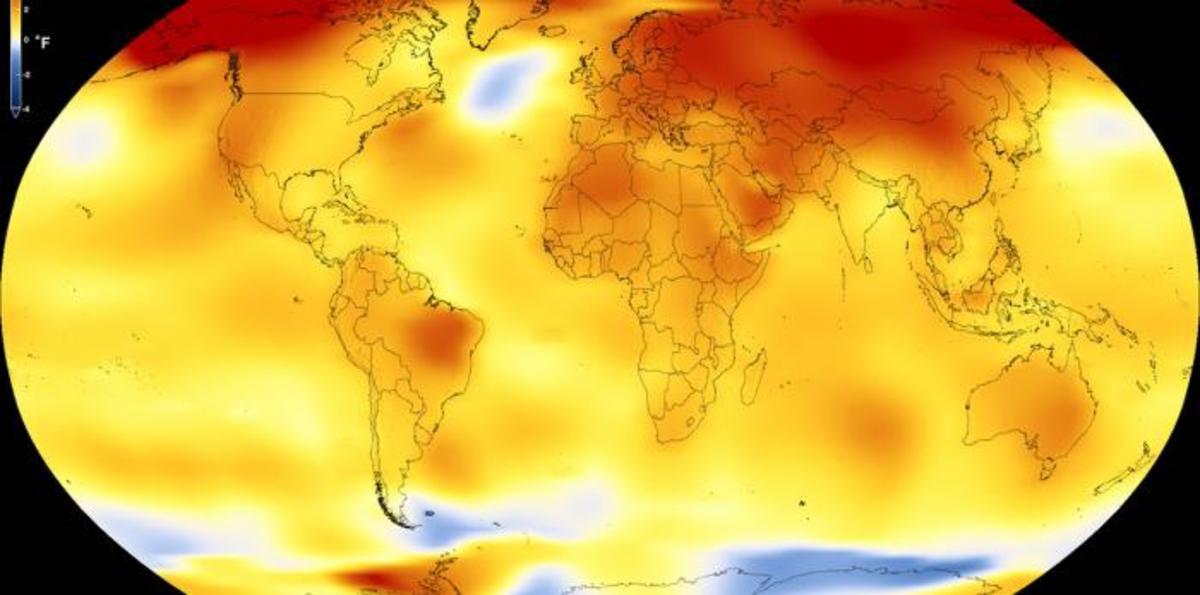

Story Image: This map shows Earth’s average global temperature from 2013 to 2017, as compared to a baseline average from 1951 to 1980, according to an analysis by NASA’s Goddard Institute for Space Studies. Yellows, oranges, and reds show regions warmer than the baseline. (Credit: NASA’s Scientific Visualization Studio)