Amy Phung: Automating exploration

From her apartment in Cambridge, Amy Phung can see the future. Armed with two controllers, she moves her arms as deliberately as a mime, her face obscured by a virtual reality headset. As part of a NOAA Ocean Exploration research cruise, she’s controlling the hybrid remotely operated vehicle (HROV) Nereid Under Ice (NUI) as it takes a push-core sample from the seafloor, over 3,000 miles away.

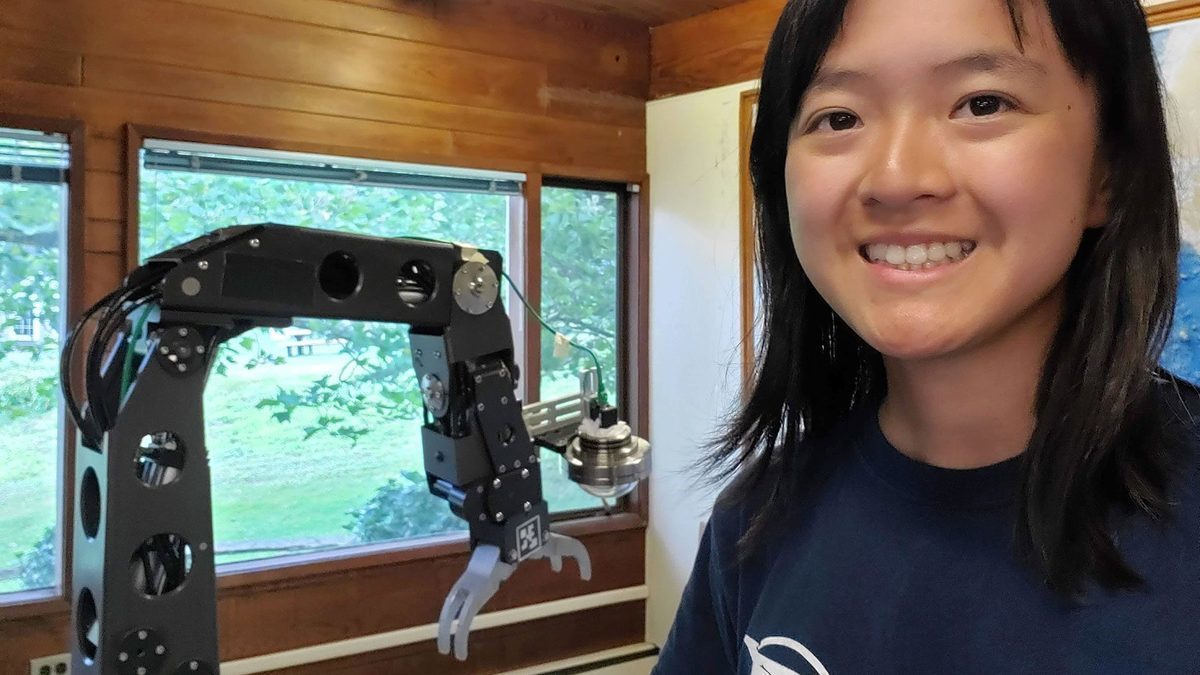

For Phung, virtual reality and robotics are a natural pairing that will accelerate exploration and facilitate human connection, opening the door to profound new ways of problem-solving. While still a student at Olin College of Engineering, she helped design a virtual reality control room for ROV pilots at the Monterey Bay Aquarium Research Institute. In 2020, she came to the Woods Hole Oceanographic Institution as an undergraduate summer fellow to work on robotic manipulator arm calibration with scientist Rich Camilli. Now enrolled in the MIT-WHOI Joint Program, Phung is focusing her graduate work on automating those robotic arms. She envisions that one day, scientists will be able to ask robots a question, and the robots will find the samples they need to answer it all on their own.

Oceanus: We tend to think of robots and automation in factories. How can they be helpful to marine science? Why does that interest you?

Phung: There’s a lot we don’t know about the ocean, and a remarkable percentage of it remains unmapped and unexplored. Robots have lots of potential for helping us automate some of that exploration, which will provide us with better insight into ocean processes and our impact on them. Having robots that are able to explore these places and identify where we need to intervene will help us make decisions about how to protect the environment.

Oceanus: Tell us about your ongoing research at WHOI. What did you demonstrate with Nereid Under Ice during the September 2021 Ocean Exploration cruise?

Phung: I’m really excited by the idea of helping bring these robotic arms to a state where we can trust them to autonomously collect samples. However, making the jump from minimal automation to having the robot understand what samples are worth collecting requires a lot of incremental steps. During this cruise, we were able to have a shore-side scientist specify higher-level objectives such as “pick up the push-core.” Nereid Under Ice could interpret that phrase and translate it into the arm motions needed to pick up the tool. Once the robot had the tool in hand, the scientist could specify a location in a 3D environment, and the robot was able to move the arm to sample that location. In these demonstrations, we relied on the scientists’ own judgment to determine what to sample, but “handed over the joystick” for controlling the arm to the partially automated system. Collecting a sample with shared autonomy between the robot and the scientists helps get us one step closer to fully automated sampling.

In a September 2021 test with the hybrid remotely operated vehicle (HROV) Nereid Under Ice off the coast of California, Phung was able to operate the vehicle remotely from her apartment in Cambridge, Massachusetts. For safety reasons, a pilot aboard the E/V Nautilus approved each request before the vehicle arm moved. (Credit: Video and VR visualization courtesy of Amy Phung, © Woods Hole Oceanographic Institution)

Oceanus: What was it like to operate Nereid Under Ice remotely? What are the benefits of using virtual reality to control underwater vehicles?

Phung: Through the VR interface, it felt like I was a diver sitting right next to the robot. Honestly, I’m pretty mind-blown by the idea that this enabled me to interact with live data coming off a robot, sitting on the seafloor 4,000 miles away from me, in real time. I can’t believe the technology’s already at this point, and I’m excited to see how it’ll continue to evolve over time.

I’m also excited by the fact that this technology lets us feel like we’re standing in a place we can’t actually get to ourselves. The fact that this interaction can happen anywhere, even somewhere as mundane as my apartment, means there’s potential to share this experience with even more people. We can’t necessarily send everyone to the bottom of the ocean, but this technology can give a wider audience a sense of what it’s like down there.

In terms of practical uses, being there, seemingly in person, adds a lot of things you can’t really quantify. Seeing the underwater environment around you gives you a good sense of scale, and I think this has the potential to provide more precise control over sampling locations.

Oceanus: What do you think the future of undersea exploration looks like?

Phung: I envision we’ll reach a point where we’ll be able to ask robots broad questions like, “Is temperature affecting fish species in this region?” A fleet of robots could monitor a particular region, collect samples, and tell you what’s happening.

There’s also a parallel to exploring outer space. If we can get robots to the level where they’re independent enough to make their own decisions without human support, that makes it possible to explore oceans beyond Earth, like those on Europa and Enceladus.